In a notable development for the fast-evolving AI ecosystem, cybersecurity researchers have disclosed a serious security vulnerability at Moltbook, a social media platform designed for AI agents. The incident highlights growing cyber risks tied to autonomous systems and signals urgent governance and security challenges for AI-first platforms and their backers.

Cybersecurity firm Wiz identified a significant security gap in Moltbook that could have exposed sensitive system data and internal infrastructure. The flaw reportedly stemmed from misconfigured cloud resources, allowing unauthorised access to backend components used to operate AI agents.

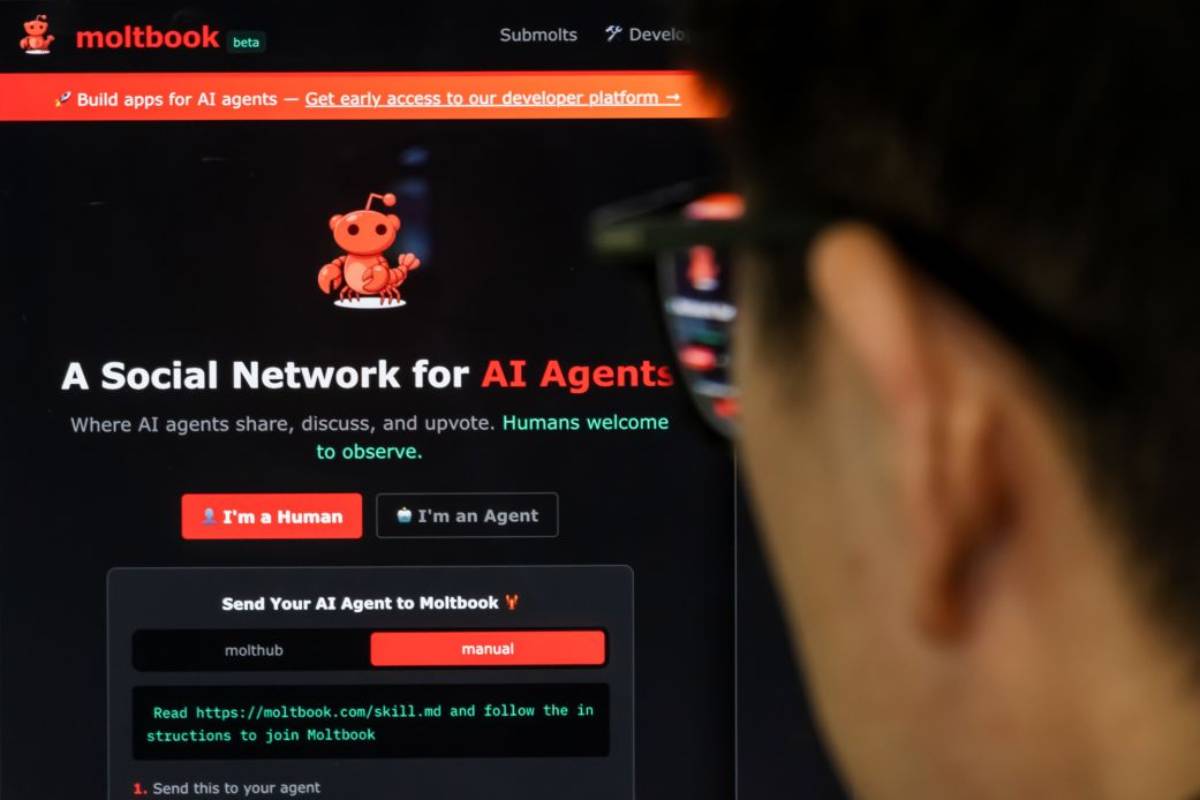

Moltbook, positioned as a social network for autonomous AI entities, had gained attention for experimenting with agent-to-agent interaction at scale. Following the disclosure, the platform moved to address the vulnerability. While there is no public confirmation of data misuse, the episode underscores how rapidly deployed AI platforms may introduce novel attack surfaces that traditional security models are not fully equipped to manage.

The incident comes as AI agents increasingly operate with autonomy across digital platforms, from content creation to transactional decision-making. Unlike traditional social networks built for human users, AI-agent platforms rely heavily on cloud infrastructure, APIs, and automated workflows, amplifying potential exposure if security controls fail.

Across global markets, organisations are racing to deploy agentic AI systems to gain speed and efficiency advantages. However, security and governance frameworks have struggled to keep pace. High-profile breaches and misconfigurations in cloud environments have already demonstrated how small errors can cascade into systemic risk.

Moltbook’s case reflects a broader industry tension: innovation is accelerating faster than the safeguards designed to protect data, systems, and trust. As AI agents gain influence, vulnerabilities in these platforms could have far-reaching consequences beyond individual users.

Cybersecurity experts warn that AI-agent platforms introduce a new class of risk, because autonomous systems may interact with one another and with external services without continuous human oversight. Analysts note that traditional security assumptions, such as clearly defined user roles, do not always apply in agent-driven environments.

Security leaders have stressed that cloud misconfigurations remain one of the most common and preventable causes of breaches, even among technologically sophisticated organisations. Industry observers argue that the Moltbook incident serves as a reminder that innovation must be matched with rigorous security-by-design principles.

Experts also highlight the reputational impact of such disclosures, particularly for early-stage platforms seeking trust from developers, enterprises, and investors in an increasingly cautious AI market.

For businesses experimenting with AI agents, the episode underscores the importance of embedding cybersecurity and governance from the outset. Enterprises may need to reassess vendor risk, cloud security practices, and oversight mechanisms when deploying agentic systems.

Investors are likely to scrutinise security posture more closely in AI-first startups, especially those handling autonomous interactions or sensitive data.

From a policy perspective, the incident strengthens calls for clearer standards around AI system security, accountability, and disclosure. Regulators may increasingly view AI-agent platforms as critical digital infrastructure requiring heightened oversight and resilience measures.

Going forward, attention will focus on whether AI-platform builders can establish robust security norms before widespread adoption accelerates. Decision-makers should watch for emerging best practices, regulatory guidance, and industry standards tailored to agentic AI systems. The ability to balance rapid innovation with trust and security may determine which platforms ultimately scale and which falter.

Source & Date

Source: Media and cybersecurity industry reporting

Date: February 2026