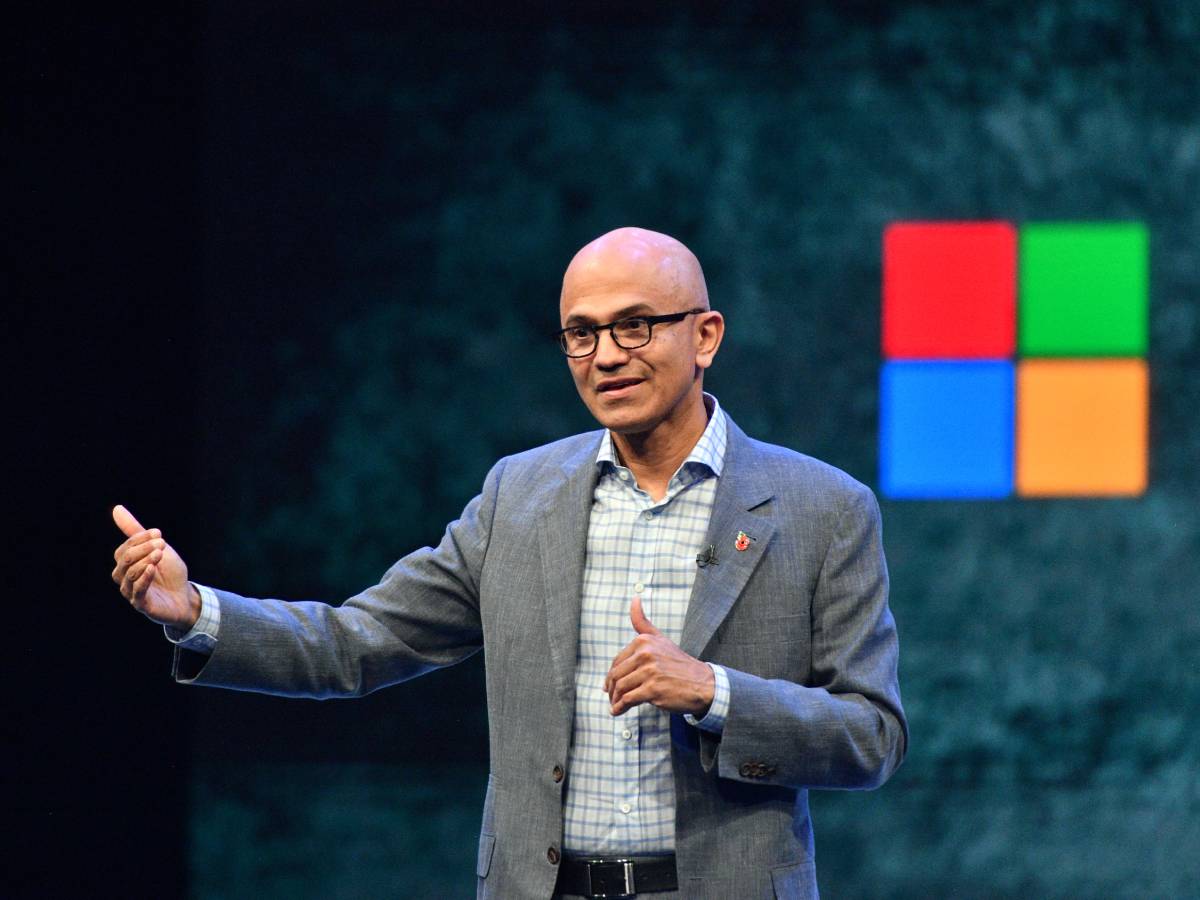

Microsoft CEO Satya Nadella has issued a direct message to the global AI industry, urging companies to make artificial intelligence socially acceptable to both citizens and governments. The call signals a strategic shift toward responsible AI governance, with implications for global markets, regulation, and corporate leadership.

Satya Nadella emphasized that AI’s long-term success depends on trust, accountability, and alignment with public interest. He called on AI companies to embed responsibility, safety, and transparency into product design and deployment.

The message targets major AI developers, cloud providers, and platform companies that shape global digital infrastructure. Nadella highlighted the need for collaboration between governments and private companies to establish shared rules of engagement for AI systems.

The statement comes as regulatory pressure rises globally, with governments increasingly demanding ethical AI frameworks, explainability standards, and risk controls. The focus is shifting from pure innovation speed to legitimacy, governance, and public acceptance.

The development aligns with a broader trend across global markets where AI adoption is accelerating faster than regulatory and social trust frameworks. From Europe’s AI Act to emerging AI governance models in Asia and North America, governments are asserting control over how AI systems are built, trained, and deployed.

Historically, technological revolutions—from social media to cloud computing—scaled rapidly before regulatory structures matured, creating public backlash and policy intervention. AI now sits at a similar inflection point. Concerns around data privacy, job displacement, algorithmic bias, misinformation, and national security have made AI a political, social, and economic issue—not just a technological one.

For global corporations, AI is no longer just a productivity tool but a governance challenge. Corporate responsibility, regulatory compliance, and public trust are becoming core components of AI strategy alongside innovation and profitability.

Industry analysts view Nadella’s message as a recognition that AI legitimacy is becoming as important as AI capability. Experts argue that without trust frameworks, AI adoption risks regulatory clampdowns, public resistance, and fragmented global markets.

Policy experts highlight that governments are increasingly unwilling to accept “move fast and break things” approaches in AI development. Instead, they are demanding risk assessments, accountability mechanisms, and auditability.

Corporate leaders across sectors are beginning to echo similar concerns, emphasizing responsible AI, explainability, and human oversight. Market observers note that trust-based AI governance could become a competitive advantage, differentiating companies that can scale safely from those that face regulatory friction.

The consensus view is that AI companies must evolve from pure technology builders into institutional actors that operate within political, ethical, and societal frameworks.

For global executives, the shift could redefine operational strategies across AI development, deployment, and governance. Companies may need to invest more in compliance, AI ethics teams, governance frameworks, and regulatory engagement.

Investors are likely to judge AI companies not only on innovation and growth but also, increasingly, on regulatory resilience and reputational risk management.

For policymakers, Nadella’s message reinforces the push for structured AI regulation rather than reactive bans. Governments may accelerate standard-setting, certification models, and international AI governance cooperation.

Analysts warn that firms ignoring responsible AI principles risk facing stricter regulations, public backlash, and market exclusion in highly regulated regions.

Decision-makers should watch for coordinated industry frameworks on responsible AI, stronger government-industry collaboration, and the emergence of global AI governance standards. Over the next 12–24 months, AI leadership will increasingly be defined not just by model performance, but by trust, compliance, and social legitimacy. The AI race is shifting from raw capability to sustainable acceptance.

Source & Date

Source: The Times of India

Date: January 2026